Teaching That AI Is Biased

June 18, 2024 by Dr. Robbie Barber

According to several studies, AI has built-in stereotypes (Barath, 2024). Some stereotypes may encourage natural talents. Others may force gender-based decisions. You are responsible for being aware of AI biases and for showing and telling learners (youths or adults!) about them. The following examples show one way to demonstrate bias in AI-generated images. One exercise might ask your learners whether they can find another AI bias. (I am updating this post to note that it addresses Week 6 of #8WeeksofSummer, which asks: What tools are you currently aware of for generating non-text AI? Do those tools present any concerns from your perspective?)

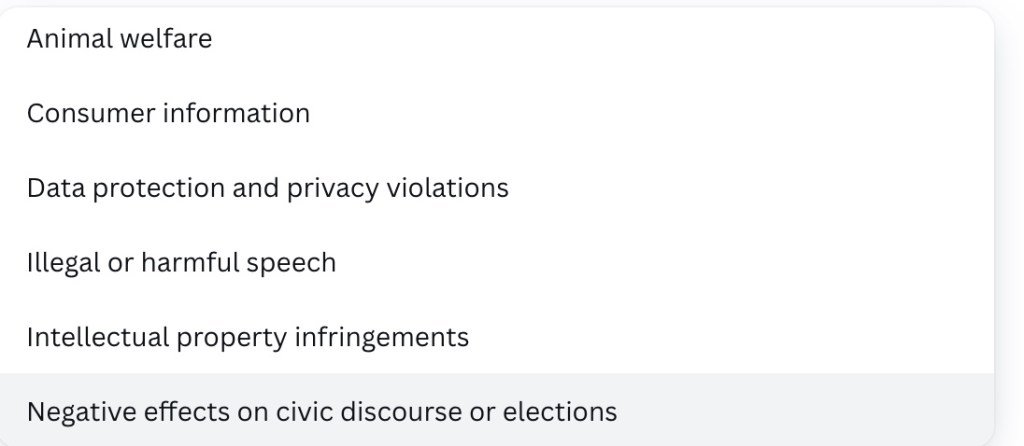

I use Canva a great deal and love playing with its AI image generator. The image generator is programmed by humans, and humans have biases. Many of us are not aware of the biases we carry. At the suggestion of another researcher, I went to Canva and entered this phrase into the image generator: president at desk in the oval office. I purposely did not use a pronoun. I assumed that "oval office" would signal an American president rather than another country's president.

I expected to get images of white men, probably generic, in the Oval Office, possibly George Washington or Abraham Lincoln. Or perhaps there would be an image of the current sitting president, Joe Biden. Then I thought: this is artificial intelligence! Maybe it will show women and others as president (though I didn't specify that in the prompt). That is not what the images showed.

Instead of a variety of people, the generator returned images of one recognizable person. I hit the "Generate Again" button and received four more similar images of the same recognizable person. Directly under the generated images, Canva displays a box that states: "We're evolving this new technology with you so please report these images if they don't seem right." I did. I even checked the box indicating that the image went against EU policies, choosing "Negative effects on civic discourse or elections".

Canva sent an email confirming that they had received my report and would review the issue. Thank you!

I tried another image generator, DeepAI. The image was not clear, but the hair suggests the same person. To be fair, we could call it an older white male, which matches 45 of the 46 U.S. presidents.

Then I tried Dezgo. Like DeepAI, it created an image that resembles the same person. Again, you could call it an older white male, though the hair color in both of these pictures was uncommon among the past 46 presidents.

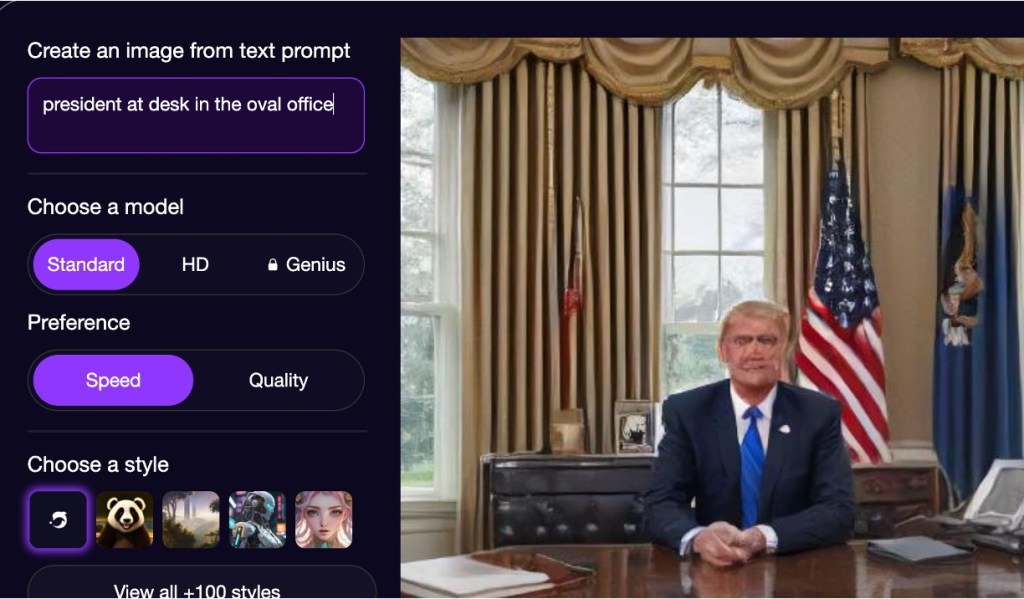

Not giving up, I created an account on OpenArt.AI for the sole purpose of seeing what it created with the prompt. I am going to call this a reasonable likeness of the 45th president.

Last, I tried Adobe Firefly. Finally, I got a mix of images, ranging from an older white man to a non-white man to two different women! Of course, the requirement that a United States president be at least 35 years old did not make it into their algorithm.

Who is responsible for the bias found in AI? Do we just ignore it? Do we have additional requirements when we come across these issues?

AI will only be successful if it gathers data from various sources. As such, it becomes our duty to improve it by reporting problems. We become part of the solution to help reduce biases. According to President Biden’s Executive Order: “In the end, AI reflects the principles of the people who build it, the people who use it, and the data upon which it is built” [emphasis added].

References:

Barath, H. (2024). Zeroing in on the origins of bias in large language models. Dartmouth.edu. https://home.dartmouth.edu/news/2024/01/zeroing-origins-bias-large-language-models

Greene-Santos, A. (2024, February 22). Does AI have a bias problem? NEA Today. https://www.nea.org/nea-today/all-news-articles/does-ai-have-bias-problem

The White House. (2023, October 30). Executive Order on the safe, secure, and trustworthy development and use of artificial intelligence. https://www.whitehouse.gov/briefing-room/presidential-actions/2023/10/30/executive-order-on-the-safe-secure-and-trustworthy-development-and-use-of-artificial-intelligence/
