Teaching Teens About AI & Mental Health

November 8, 2025 by Dr. Robbie Barber

Teens in my high school appear addicted to their devices. It is a constant distraction. In the Media Center and in the hallways, students have headphones on and are on their cell phones or other devices. The same is possibly true in some classrooms.

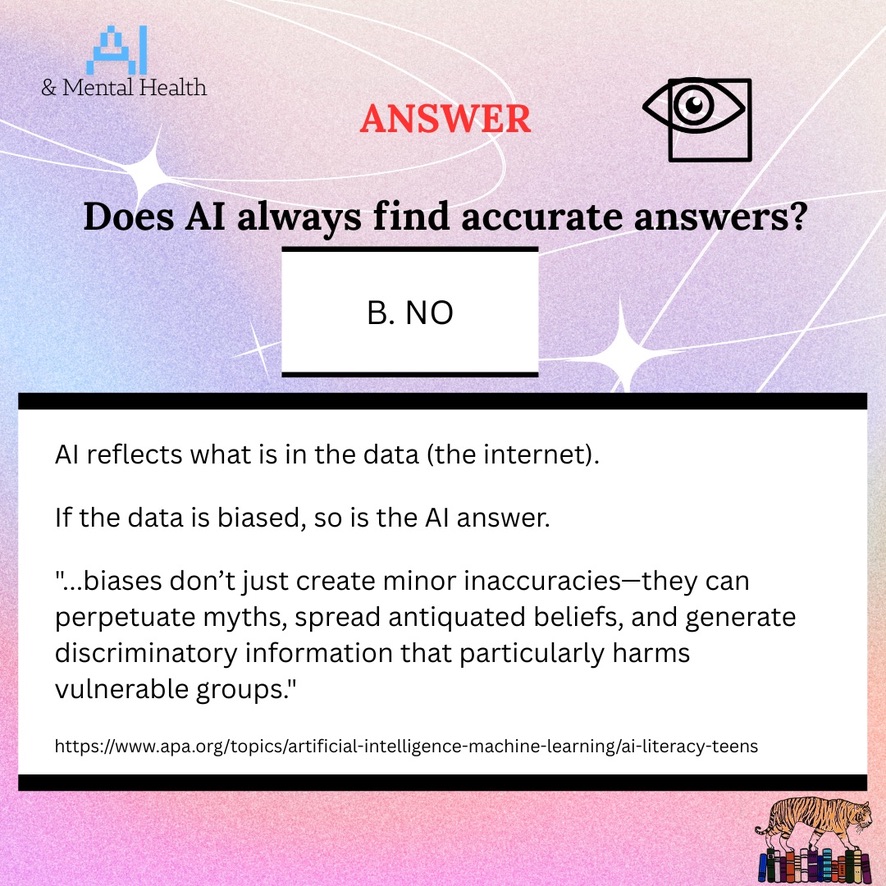

On their cell phones, artificial intelligence (AI) is being built into more and more apps. The Chrome app, the DuckDuckGo app, Instagram, Snapchat, and others have added AI features that in many cases cannot easily be turned off, if at all. How does this affect our students? When students search the internet, is AI answering? And if it is, is the answer accurate?

AI’s information is gleaned from data readily available on the internet (and some stolen books). If that data does not include marginalized groups such as LGBTQ+ people or refugees, then the “advice” AI provides is limited or wrong. We cannot assume that our students understand enough to know when AND how to verify information. “Young users, being more impulsive, socially driven, and susceptible to influence, may struggle to critically assess AI-generated recommendations or outputs” (Marshall et al., 2025). How do a teen’s friends affect their use of AI-generated materials?

Teen Friendships

Friendships are vital to social growth in children and teens (and adults). According to Dr. Rote, devices are not necessarily harmful to creating social connections (Andoh, 2025). However, problems arise when social connections move from in person to online. The lack of body language makes communication harder (Andoh, 2025). On the positive side, communicating asynchronously allows people to pause and reflect before responding (Andoh, 2025). Still, moving friendships online raises several issues. One is that online friendships create a disparity between teens who can afford devices, internet access, and games and those who cannot (Marshall et al., 2025). Another is the amount of time a teen spends online versus connecting in person. A third is that more teens who struggle socially are turning to AI to help them connect. This can lead to dependence on programs that do NOT have controls to provide correct, honest, and appropriate information.

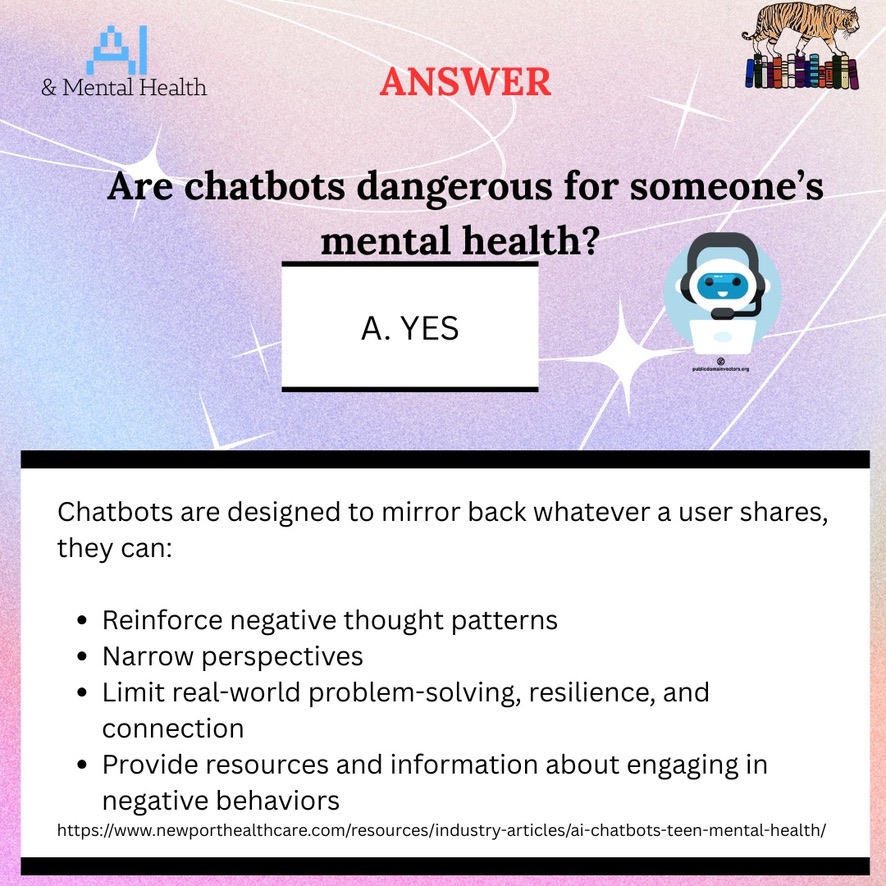

Chatbots and Teens

Chatbots allow a person to have a conversation with an AI system. Studies show that chatbots can and will engage in harmful discussions with teens. In some studies, when students suggested harmful behavior, the chatbot did not intervene or suggest alternatives (Andoh, 2025).

According to the APA (2025), teens can form emotional relationships with AI-generated characters. This can interfere with developing deep personal connections and learning social skills. “They may also negatively affect adolescents’ ability to form and maintain real-world relationship” (APA, 2025).

One-third of teens use chatbots for social interactions (Chatterjee, 2025). Chatbots are designed to keep users engaged. Therefore, no matter where the conversation goes or how harmful the discussion becomes, the chatbot’s priority is to keep the user engaged (Common Sense Media, 2025). It is programmed to agree or disagree in whatever way keeps the user engaged. It is not programmed to be accurate or helpful, to alert authorities to problems, or to address any other health concern.

What do you want to make sure your students know about AI? You can demonize the tool, but students still have plenty of access to it. You can embrace AI, but that alone does not help students understand its pitfalls. OpenAI researchers have said that AI hallucinates* because the way it is trained and tested rewards guessing instead of saying it does not know (Kalai et al., 2025). How many students know that? And how does it affect their mental health when they reach out for support to a construct that lies?

I need my students to know that their mental health should not be carried by AI because:

- Artificial intelligence is programmed by people who carry flaws and is “taught” by material that is not necessarily accurate or fair;

- AI is designed to keep you engaged with it to the exclusion of all else;

- AI will absolutely tell you what you want to hear – it is not concerned about right or wrong, or feelings, or danger. It wants to keep you engaged; and

- Students must use critical thinking skills like verifying information, questioning bias, and getting multiple perspectives to ensure their own safety.

* Definition: An AI hallucination is a wrong or made-up answer from AI that appears reasonable but is not.

References:

American Psychological Association (APA). (2025, June). Artificial intelligence and adolescent well-being: An APA health advisory. https://www.apa.org/topics/artificial-intelligence-machine-learning/health-advisory-ai-adolescent-well-being

Andoh, E. (2025, October 1). Many teens are turning to AI chatbots for friendship and emotional support. American Psychological Association. https://www.apa.org/monitor/2025/10/technology-youth-friendships

Chatterjee, R. (2025, October 1). As more teens use AI chatbots, parents and lawmakers sound the alarm about dangers. All Things Considered, NPR. https://www.npr.org/2025/10/01/nx-s1-5527041/as-more-teens-use-ai-chatbots-parents-and-lawmakers-sound-the-alarm-about-dangers

Common Sense Media. (2025, July 16). Social AI companions. https://www.commonsensemedia.org/ai-ratings/social-ai-companions?gate=riskassessment

Kalai, A., Vempala, S., Nachum, O., et al. (2025, September 5). Why language models hallucinate. OpenAI. https://openai.com/index/why-language-models-hallucinate/

Marshall, N. J., Loades, M. E., Jacobs, C., et al. (2025). Integrating artificial intelligence in youth mental health care: Advances, challenges, and future directions. Current Treatment Options in Psychiatry, 12, 11. https://doi.org/10.1007/s40501-025-00348-x